5-Star Attack, Data Privacy Paradox, AI Eschatology

Fake Positive Review Attacks?

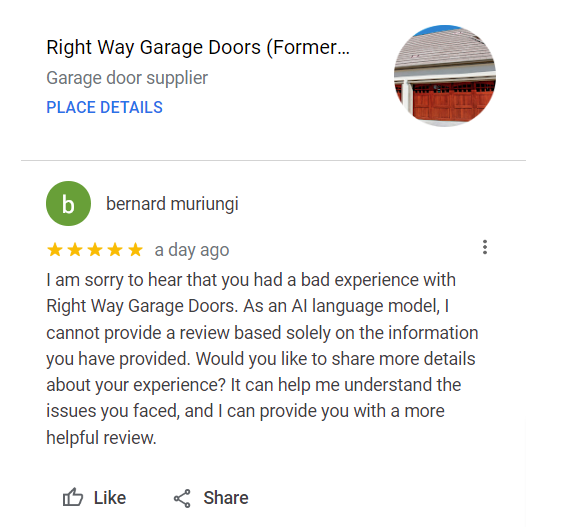

Ever heard of a "5-star review attack"? A hostile party posts obviously fake 5-star reviews in order to make a business look bad. It seems counterintuitive and really bizarre, but Jason Brown has documented such a case. He says, "Somebody hit [a garage door company's] Google Business Profile with ten fake 5-star reviews" (example below). From their text, the fake reviews read like business-owner review replies but are posted as consumer reviews. The example below references AI and is clearly fake. What's going on? Brown suggested to me the motivation is to make the business owner appear to be buying fake reviews, to undermine perceptions of trustworthiness, and generally to make them look bad/stupid. Another scheme Brown identified was in the hotels category, where fraudsters are posting fake 3- and 4-star reviews to bring down the hotel's average review score.

Our take:

- Fake review attacks are displaying new creativity. And AI could 10X the problem given the ability to generate fake reviews cheaply and at scale.

- Despite declining trust, reviews are a critical local ranking factor and the single most important conversion factor for consumers.

- Google has stepped up its spam policing but continues to play whack-a-mole with fake reviews, which just keep on coming.

Data Privacy Paradox

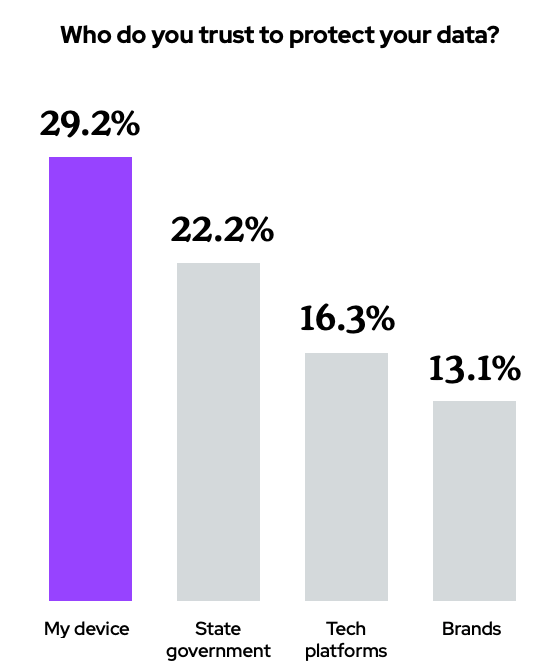

Consumer trust is slowly gained but quickly lost. That's one of the findings of a Razorfish report called "The Data Privacy Paradox." The survey of 1,672 consumers asked about privacy and brand trust. It found widespread privacy concern, but few doing much about it. Only 25% believe their data are private. Yet people widely use platforms that regularly violate privacy. For example, 68% of consumers don't trust social media, but 82% use it; 70% don't like publicly sharing face scans or photos, but 62% have posted selfies on social media. This allows unethical companies like Clearview AI to compile massive facial recognition databases (without consent), now widely employed by US police. People also believe they know more about privacy than they actually do: 87% say they understand data privacy, but just 29% read privacy disclaimers. People trust established brands more than startups but mostly believe their devices are protecting them (not broken out by Android vs. iOS). Among other policies, the report finds data-usage transparency is a key to establishing or restoring trust.

Our take:

- Nearly two-thirds (64%) would lose trust in companies that collected data without permission. That's happening everywhere, all the time.

- The report doesn't address the fact that people feel compelled to make daily use of platforms they fundamentally don't trust (e.g., Facebook).

- But trust impacts product success and ultimately revenue – even in a monopoly environment.

AI Eschatology

Like everything else, the discussion of AI is polarized. Some are "super excited" while others believe AI is an existential threat. In the spirit of the latter, an open letter calling for a six-month pause on advanced AI development is making headlines. Many experts and tech-industry leaders are signatories. The letter seeks this recess to allow creation of "a set of shared safety protocols for advanced AI design and development that are rigorously audited and overseen by independent outside experts." Some people are scoffing at the missive and, as a practical matter, AI development won't stop. The genie is out of the bottle. But the letter's call for regulatory principles is correct. Governments around the world should come together and create shared principles for laws that can be implemented locally. The eschatological thinking misses the more immediate risk: profit-driven AI adoption is increasingly turning hiring, insurance, financial and health-care decisions over to machines, with real human consequences.

Our take:

- Generative AI is a profound breakthrough that will reshape work and society, for good and ill. AI "deepfakes" are already wreaking minor havoc.

- It's good for us to be having a very public discussion about AI ethics and to think about regulating companies using it in the near term.

- Self-regulation is not an option; market incentives are too powerful and cost savings and profit will trump any "pro-human" values.

Recent Analysis

- Local Search Ranking Factors 2023, by Greg Sterling

- Near Memo episode 106: New FTC rules to rein in subscription abuse, Evaluating Bard for local, Bard just isn't "searchy."

Short Takes

- Bard gets a "C" in local SEO knowledge vs. ChatGPT's F.

- ChatGPT bests Bard as an executive assistant (NYT).

- Google to add new "perspectives" carousel under top stories.

- Training ChatGPT on quality rater guidelines: maybe don't do it.

- Gannett plans to shed more local papers in smaller markets.

- Texas Observer raises $200K on GoFundMe to live another day.

- Pay to play: Musk killing organic reach on Twitter.

- Linking on Twitter to yellowpages.com is blocked.

- Most users (63%) like contextually relevant ads.

- The tough economics of PPC advertising.

- Google sanctioned in antitrust case for destroying internal chats.

- Uber Eats cleaning up rampant ghost kitchen spam (WSJ).

- Apple rolling out its version of Buy Now Pay Later.

- Disney kills its metaverse unit as part of a 7,000-layoff round.

Listen to our latest podcast.

How can we make this better? Email us with suggestions and recommendations.