Personalized Google Rankings, Fake Reviews Thriving, AI Trust Growing

Google to Personalize Rankings

At the recent BrightonSEO conference in San Diego, Google Search Liaison Danny Sullivan said major changes were coming to search, beyond the volatility of the past year or so. This morning Google at least partly delivered on that promise. As first reported by Barry Schwartz, Google announced three new features: personalization, Notes and Hidden Gems. Hidden Gems will surface more "authentic" first-person content from social media, blogs and online forums. Notes are annotations or comments on articles that will appear in the SERP in the Google app and in Google Discover. They're an opt-in Google Labs "experiment." There's a very good chance Notes will become a spam magnet, although Google is probably anticipating that. The last and most interesting change is search personalization. A newish "follow" button in the SERP influences which topics you see in Google Discover and can also act as a kind of filter for search results. Google will also implement personalized ranking: "if you’ve searched for something a few times ... and keep returning to the same web page, our systems will recognize that and bring the site to the top of the search results." For years Google said it doesn't (really) personalize results; now it will.

Our take:

- Notes, follow and personalization all require users to be signed in. It's a binary choice: they're either on or off, although you don't have to engage with them.

- Personalized ranking could be a radical change, although we'll have to see how it plays out in practice. There would likely be no way to optimize for it.

- These changes, in addition to others in the SERP, should be seen in the larger context of Google defending its monopoly against social media and ChatGPT.

Fake Reviews Are Thriving

On Monday Mike Blumenthal wrote about a New York State action against an orthopedist caught trafficking in fake reviews. The same day, a lengthy NY Times article discussed the same doctor and the broader problem of review fraud, asking whether a regulatory crackdown can eliminate it. In a word: no. The states and the FTC have limited resources to go after review fraud and must focus on "high profile" cases they hope will deter others. But the penalties generally aren't severe enough to actually discourage other offenders. The Times article asserts that review fraud is a billion-dollar industry. If true, that would probably exceed the combined revenues of all the top reputation management companies. Although Google is making more of an effort to remove suspicious reviews these days, its automated systems can both be overbroad (producing false positives) and miss fake reviews. Amazon has also seemingly stepped up its fake review enforcement, announcing the Coalition for Trusted Reviews with Tripadvisor and several others. To some degree these moves help, but they're also partly performative, designed to appease EU regulators, whose Digital Services Act (DSA) can impose significant financial penalties unencumbered by a Section 230 liability shield.

Our take:

- Given consumers' reliance on reviews (star ratings and review counts) and Google's use of reviews as a ranking signal, the incentives to cheat are great.

- The DSA appears to be the main motivator behind the platforms' recent efforts to address review fraud. So there's hope, especially if somebody gets a big fine.

- It's unlikely, however, that fake reviews can be entirely eliminated, given their massive volume and the platforms' reliance on automation to police them.

Survey: AI Trust Growing

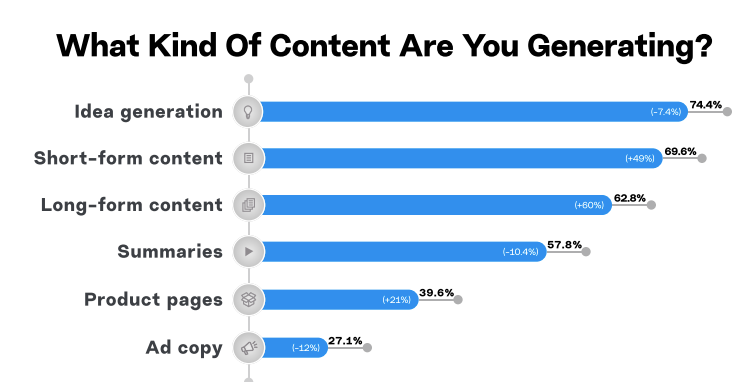

Marketers are bullish on AI to the point where a growing number aren't editing or fact-checking AI-generated content. A Q3 survey on AI tool adoption by MarketMuse and agency iPullRank had "489 participants across various industries and company sizes." The two most represented groups were marketers and software company employees. The largest segment of companies (60%) had five or fewer employees; 15% had more than 100 employees. While there's a positive corporate consensus about the value and potential of AI tools (64%), adoption has mostly happened at the individual level (57%) rather than officially at the organization or team level (35% combined). The most common applications were text and image generation, with some video creation. The most popular tools were OpenAI/ChatGPT (77%), Midjourney (15%), Bard (10%), Bing Chat (7%), Jasper (3%) and Writer (2%). By task, generating ideas, short- and long-form content and "summaries" were the most common uses, though marketers are also creating product pages and ad copy with AI. Almost 70% of respondents rated the quality of AI output positively, as a 4 or 5 on a five-point scale. And nearly three-fourths of respondents (72.5%) expressed trust in AI tools and content. Indeed, "about 10% of users [are not] editing AI-generated material" or checking it for errors, a 75% increase over the previous survey.

Our take:

- ChatGPT obviously has the greatest awareness among AI tools and perhaps the greatest utility, given the gap between its adoption and everything else.

- There are many more SaaS tools now with AI enhancements (e.g., Microsoft Copilot). I suspect the tool choices were an aided response (a predefined list) and thus incomplete.

- While hallucination is declining, the nearly 10% who don't edit or fact-check their AI output is somewhat alarming. And that share will grow as AI trust grows.

Recent Analysis

- Why Citations Ain't What They Used to Be, by David Mihm.

- Near Memo episode 134: Google Maps bloat?, citations and their declining value, Google’s new SMB attribute: helpful or performative?

Short Takes

- After antitrust trial, Google acknowledges use of behavioral signals.

- Google's AI weather prediction tool: highly accurate 10-day model.

- Some of ChatGPT's appeal is about its ad-free UI.

- Yandex exiting Russia by selling all of its Russian assets.

- Fake Bard downloads on social media spread malware.

- Google News seeing more "AI copies" of actual articles.

- Apple gets 36% of Google search revenue generated via Safari.

- As on Meta's apps, Snapchat users can now buy from Amazon without leaving the app.

- Amazon about to flood Fire TVs with ads.

- Apple's deal with Amazon blocks competitor ads from Apple product pages.

- EU regulators seeking to block Adobe's $20B acquisition of Figma.

- US judge rules social media companies must face "addiction" lawsuits.

- Self-checkout may be on the way out.

- Tesla building a diner with chargers in LA: a model for a national chain?

Listen to our latest podcast.

How can we make this better? Email us with suggestions and recommendations.