Google Ranking Leaks, Long-Tail Citations, AI Overview #Fails

Google Rides the Clickstream

The various exhibits from the recently concluded Google antitrust trial surprised many, delighted others and contradicted Google's repeated public statements that clicks and other behavioral signals (e.g., dwell time) don't impact rankings. The opposite has turned out to be true. Now a leak of Google Search API documents, provided to Rand Fishkin (overview) and analyzed by Mike King (technical), reinforces the centrality of clickstream data to the algorithm. (Fishkin explains why he believes the documents are authentic; see also the video below.) Some of the material will be familiar, but much of the detail is new. Some skeptics question whether there's anything truly revelatory here. Regardless, there's too much information to summarize. Here are a few of Fishkin's observations: intent-tracking Navboost (which relies on click data) may be the single most powerful ranking factor; Chrome clickstream data influences rankings; Google rewards known brands over smaller/newer companies (not new info); traditional SEO may not help SMBs until they become local "brands" (but see local pack); E-E-A-T may not matter as much as people think; and quality rater data probably directly influences rankings. King's lengthy post unpacks the technical details, which contain implied tactical recommendations. His big conclusion, however, is "make great content and promote it well."

Our take:

- Another finding: Google carefully tracks and categorizes clicks as proxies for intent and customer experience (CX). It treats time on site as evidence of a "good" click.

- People will be analyzing this data for some time and it will likely impact future strategy and tactics in the SEO community.

- In the video above, the leaker, Erfan Azimi (not an ex-Google employee), explains why he leaked the data (via SEL).

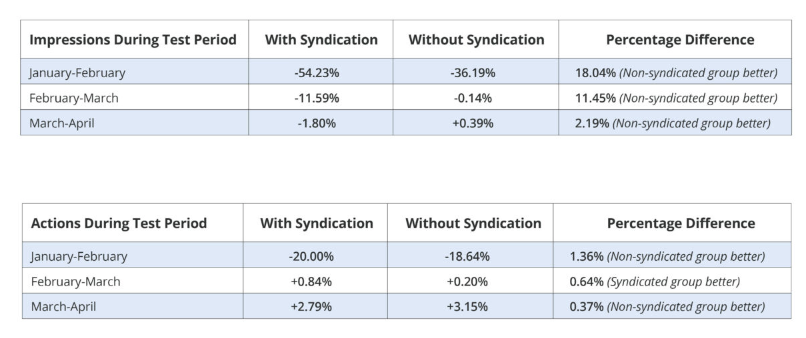

Citation Study: Long-Tail Is Dead

At one point, roughly a decade ago, NAP (name, address, phone) consistency and distribution to a wide range of online directories was seen as a critical local ranking factor, if not the top one. However, the relative importance of citations, or listings syndication, has been declining for years. There's now a local SEO consensus that long-tail directories deliver little or no value for Google local rankings, though being on major platforms and key vertical directories still matters. SOCi, which has a listings distribution component to its business, recently conducted a seven-month study involving "three multi-location brands in the retail and healthcare industries," with a total of 451 locations. The locations were randomly divided into a control group, listed on major platforms only (no syndication), and an exposed group, syndicated to major platforms plus long-tail directories. SOCi tracked impressions and actions (calls, clicks, directions). At the end of the seven months, the syndicated group was no better off than the control group, which actually slightly outperformed it. This comes as no surprise given the local SEO discussion over the past several years.

Our take:

- At Uberall, I helped initiate a 2019 study of 115K business locations (US, EU) using a similar methodology. However, we did find "meaningful increases in visibility and engagement" for listings optimized across the network.

- Google used to rely on NAP consistency as a trust signal. With Google Business Profile (GBP), it doesn't have to. The consumer side of local also used to be more competitive. With the exception of Yelp and a couple of others, most of the old competitors are now gone.

- Listings management still has value, but mostly on the major platforms and key vertical directories that have consumer traffic and rank for relevant keywords.

AI Overviews = New Google+?

Unless you've recently been hospitalized for putting glue in your pizza sauce, you know that Google's AI Overview rollout is not going according to plan. Major news outlets have cataloged high-profile mistakes, bad answers and more egregious errors (e.g., glue in the pizza sauce) that have been showing up at the top of search results. There's now even a dedicated Twitter/X account. And this isn't isolated; a lot of people are now tracking AI Overview problems. A Google spokesperson told the NY Times that the vast majority of AI Overviews deliver "high-quality information, with links to dig deeper on the web." While that may be statistically accurate, the sometimes funny, sometimes disturbing mistakes are what's getting attention. People have pointed out that Google had a year to work out the bugs before it chose to roll out AI Overviews. That's puzzling, because you'd think the company would want to get this right given all the scrutiny and pressure. In an exasperated LinkedIn post, SEO Aleyda Solis quips, "It seems that most of their concerns were about their monetization viability and impact rather than the quality." Google CEO Sundar Pichai has been giving interviews praising AI Overviews ("grounded by search") and touting how they're improving the search experience. Not exactly.

Our take:

- Separately, there's a bit of an AI Overview backlash among some users who want to turn the feature off. Google offers no opt-out or off switch (Bing has one).

- This negative PR, combined with unflattering antitrust revelations, will add fuel to the narrative of Google's decline. It could impact the Google brand.

- It appears that SGE (Search Generative Experience, the precursor to AI Overviews) was a reactive product, allegedly driven by fear, as Google+ reportedly was. Or perhaps the company really has spent more time on the advertising angle than on quality control, which may simply have been assumed.

Recent Analysis

- Near Memo podcast ep. 158: New SAB local ranking factor; interpreting Google AI tea leaves around search.

Short Takes

- Google Local has the top "share of voice" on the SERP when it appears.

- Moz: How to write a "positive" negative local review.

- Google Business Messages is going away in July of this year.

- Former Uber exec: Apple Maps better than Google for fastest routes.

- Perplexity the winner of WSJ test of five major AI engines, including Gemini.

- Google Lens and Circle to Search beefed up with links, AI Overviews.

- Digital marketers had mixed reactions to Google Marketing Live 2024.

- Apple AI strategy: make AI more practical for ordinary users.

- Apple signs deal with OpenAI but wants to preserve Gemini "as an option."

- TikTok testing hour-long video uploads (which would totally change TikTok).

- Elon Musk's xAI announces a $6 billion funding round.

Listen to our latest podcast.

How can we make this better? Email us with suggestions and recommendations.