Is Apple's Siri Sherlocking* Google Search?

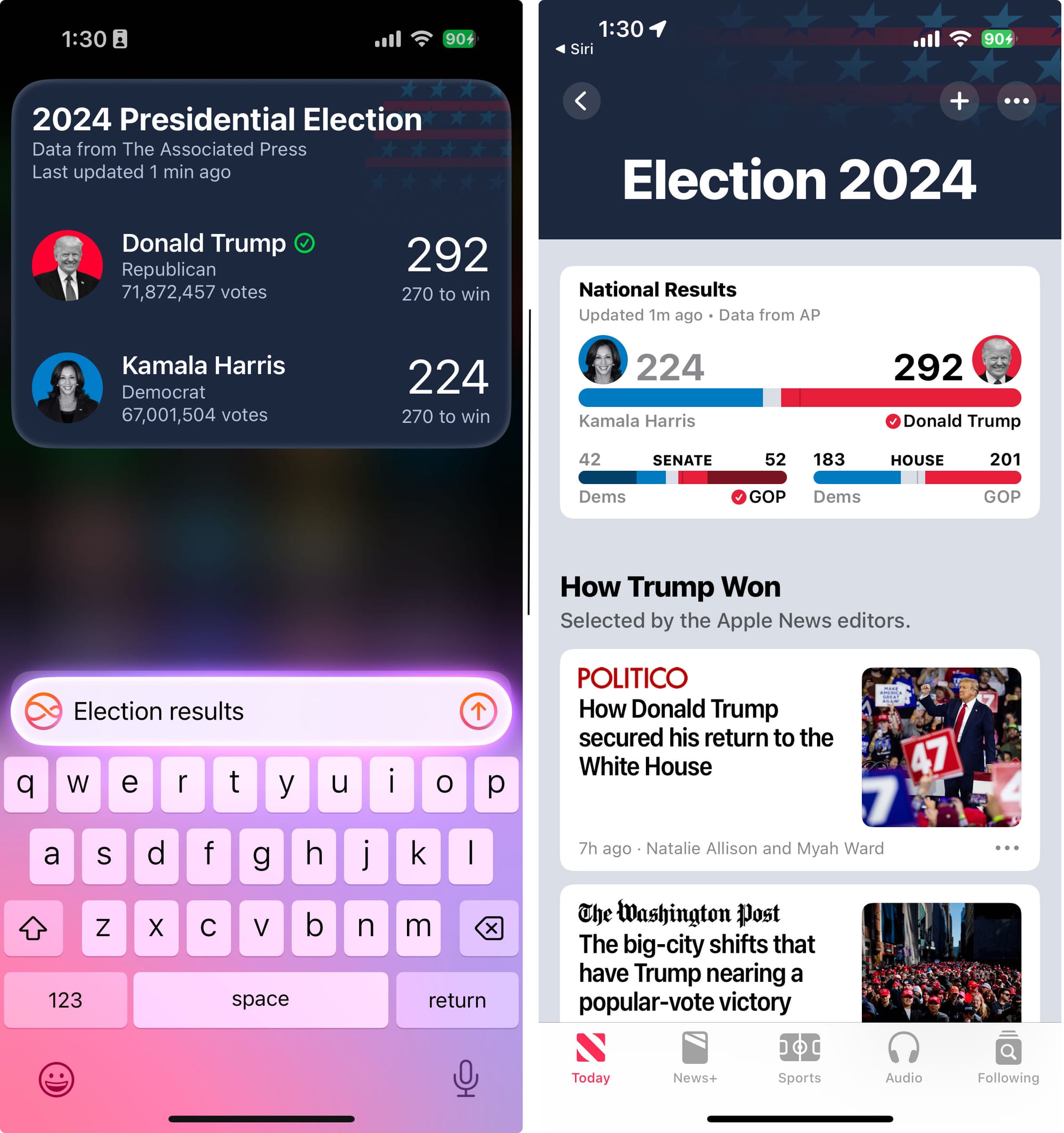

iOS 18.2 is coming this week, with new AI features and ChatGPT Siri integration. The buzz is likely to increase consumer interest in Siri. More intriguing is Siri's growing use of Apple knowledge graphs to answer many queries directly on device and its potential impact on Google.

This article has been updated on 12/12/24 with results of the ChatGPT integration.

The discovery of Siri's improved Local Search capability led me to ask: Is the new Siri capable of similarly answering questions across other knowledge domains? Could Siri pull off the biggest Sherlock* ever by integrating features to replace much of what search does?

Siri and Spotlight search have long had the ability to surface types of knowledge that aren't limited to local entities. In fact, the newly reconstituted assistant has access to many in-depth knowledge graphs that Apple has been building out since 2012.

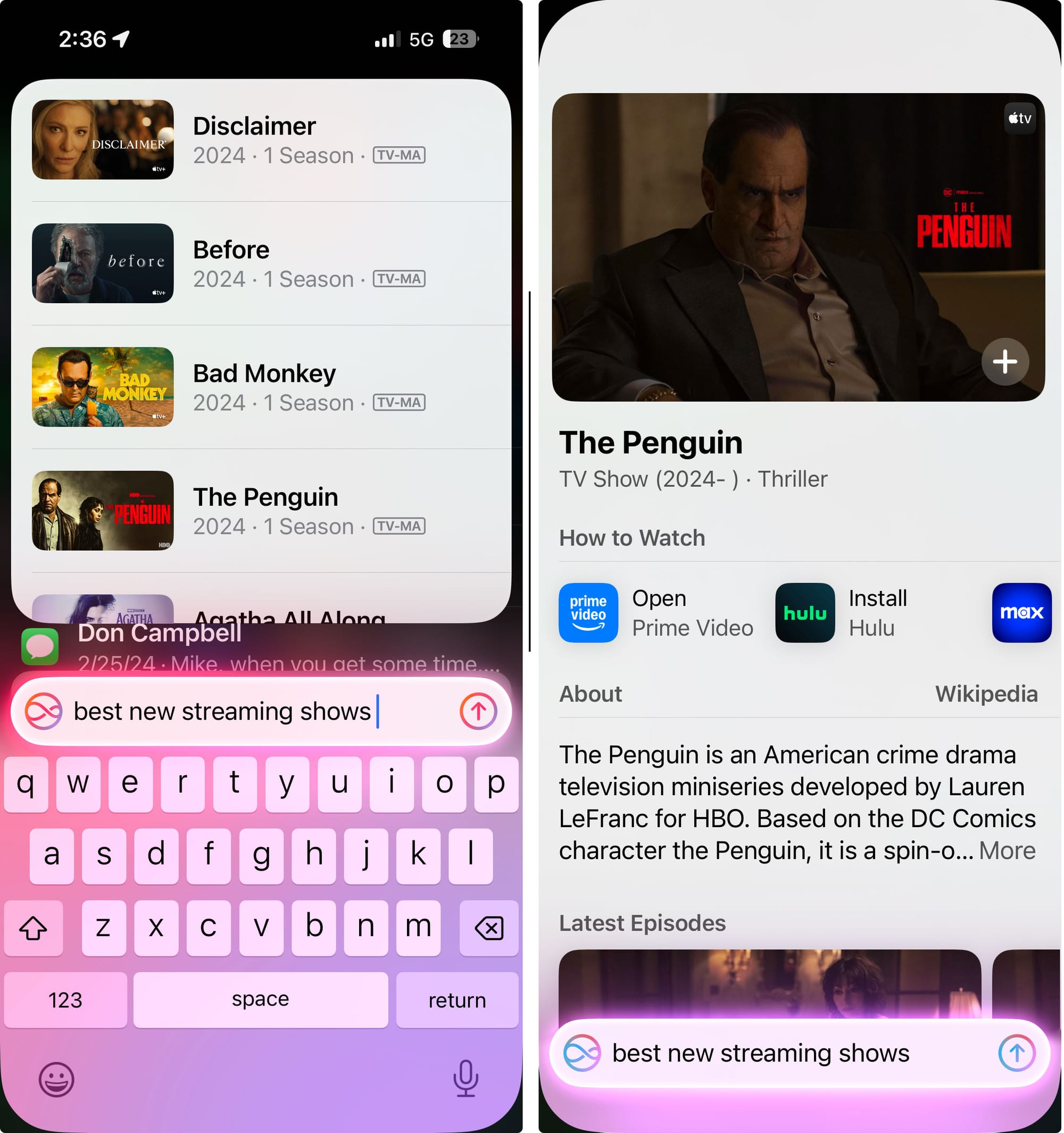

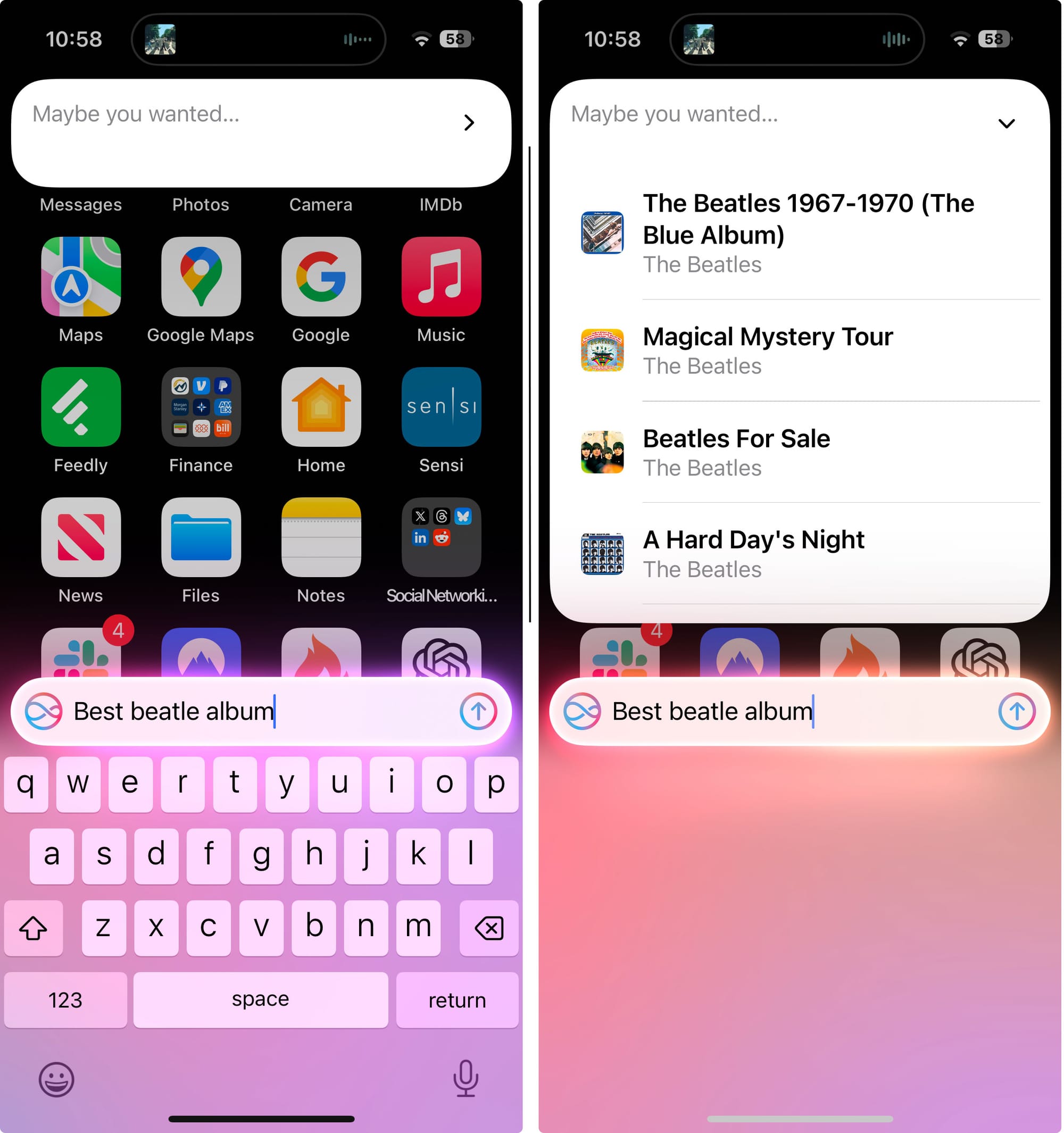

Siri 2.0 brings the ability to leverage AI to remember the context of your initial query and provide follow-on answers and, importantly, it can now be accessed through text entry and not just voice.

Many of these features are still in their early stages, but they hint at a future where more of the questions we currently ask Google are answered directly on our phones: instantly, seamlessly and without interrupting what we’re doing, opening a browser or launching an app.

Apple Builds Out Knowledge Graphs

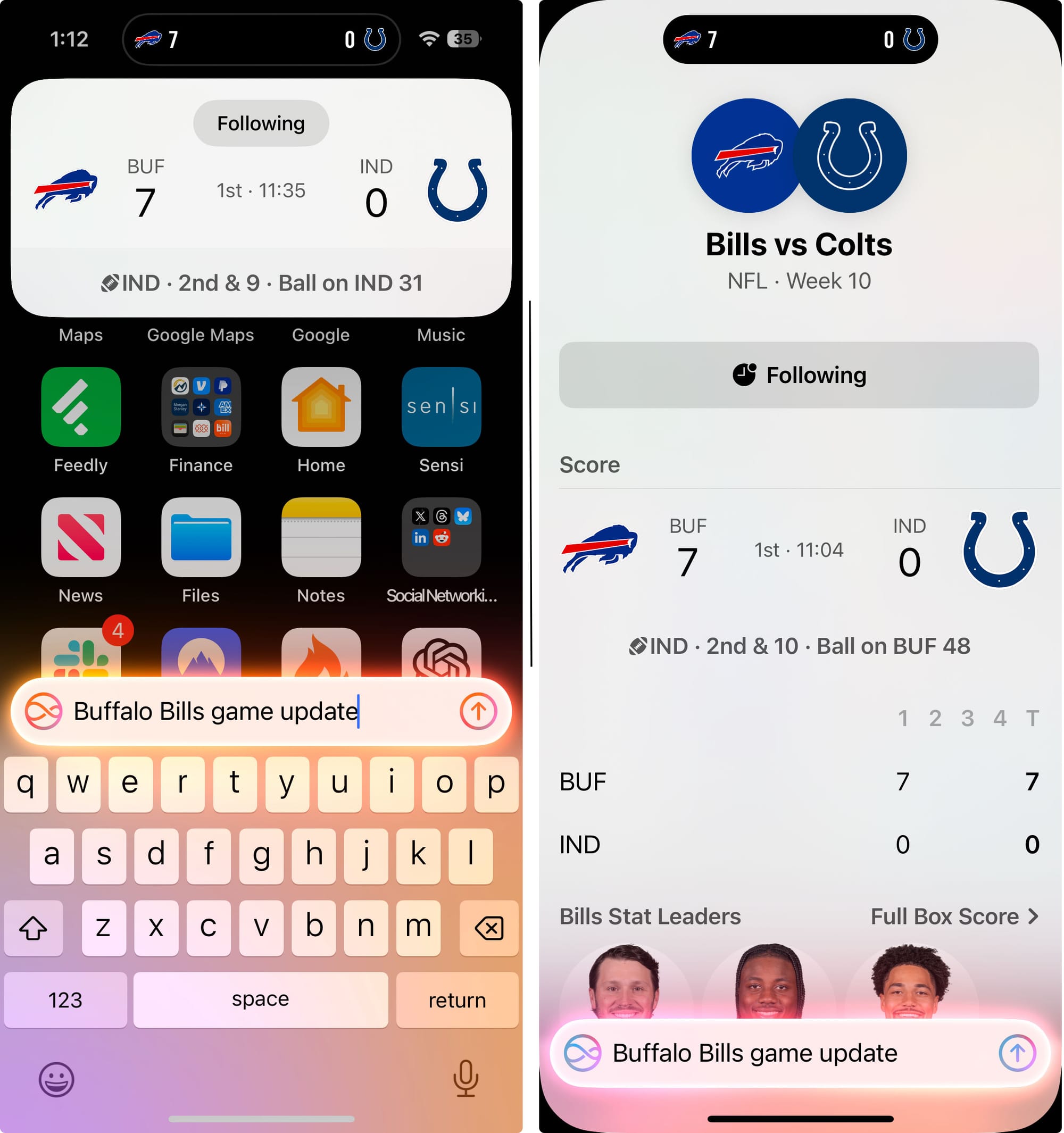

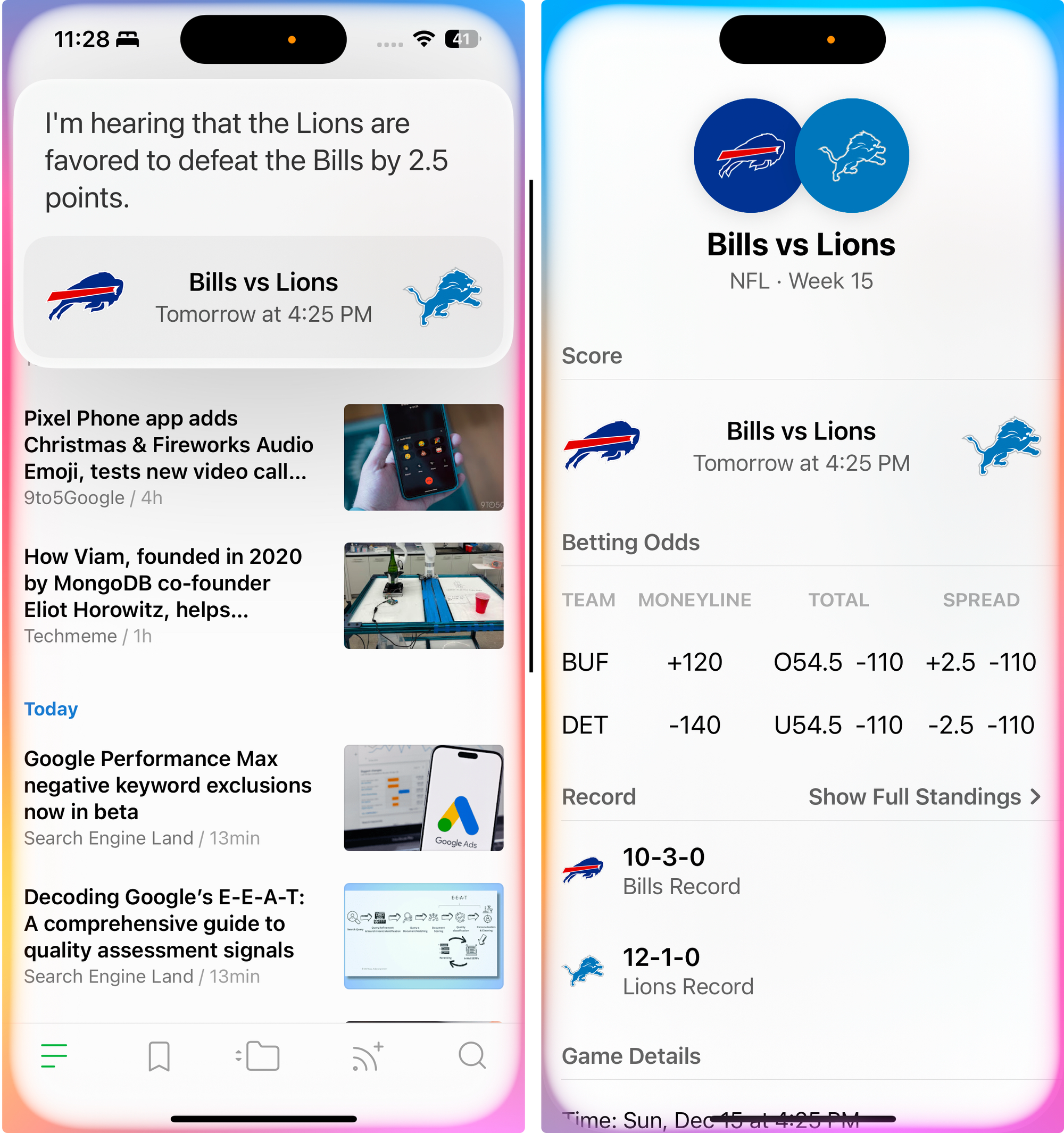

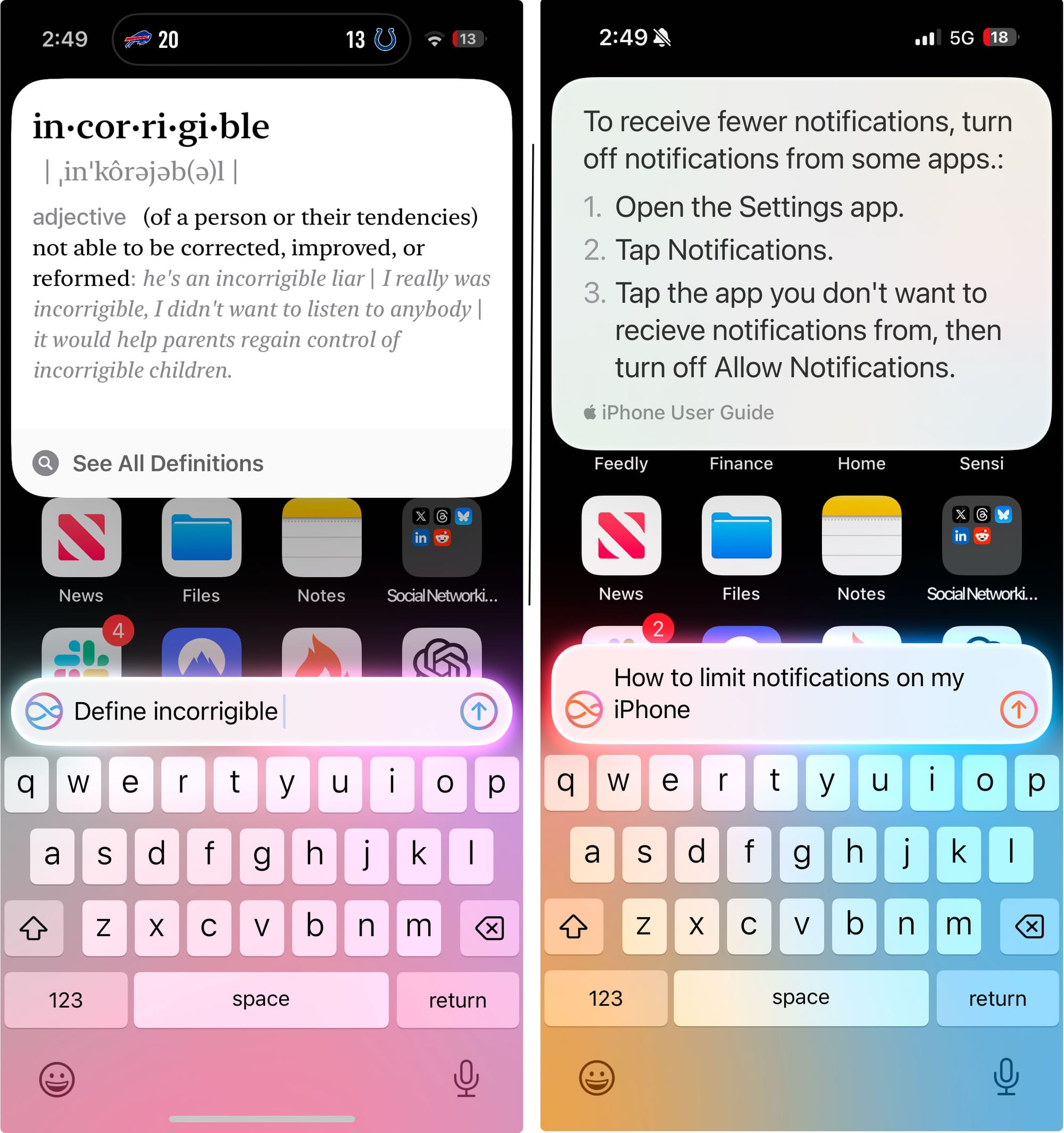

Many of the answers that we see in Siri are based on knowledge graphs that Apple has built for specific applications and cover a broad range of potential user interests. Apple is answering questions directly on the device: questions about entertainment, movies, TV shows, sports, realtime sports scores, current events, news, stocks, music, weather, Wikipedia, Local POIs, the web and more. I even found evidence of Siri being able to perform product searches.

When Siri can't answer from one of the existing knowledge domains, it will access ChatGPT or Google search to answer.

For each of these inquiries, Siri presents a short pop-up summary screen, on the fly, over whatever you are doing on your phone. It then provides the user with easy, one-click access to a longer answer without going onto the open web. It's a very slick informational retrieval interface that allows the user to either explore the query in more depth or easily return to the iPhone task at hand.

Apple has also built out other knowledge graphs, like the long-standing dictionary and the new-to-iOS 18 ability to ask for instructions on how to interact with your iPhone settings.

Siri Looks to the Web?

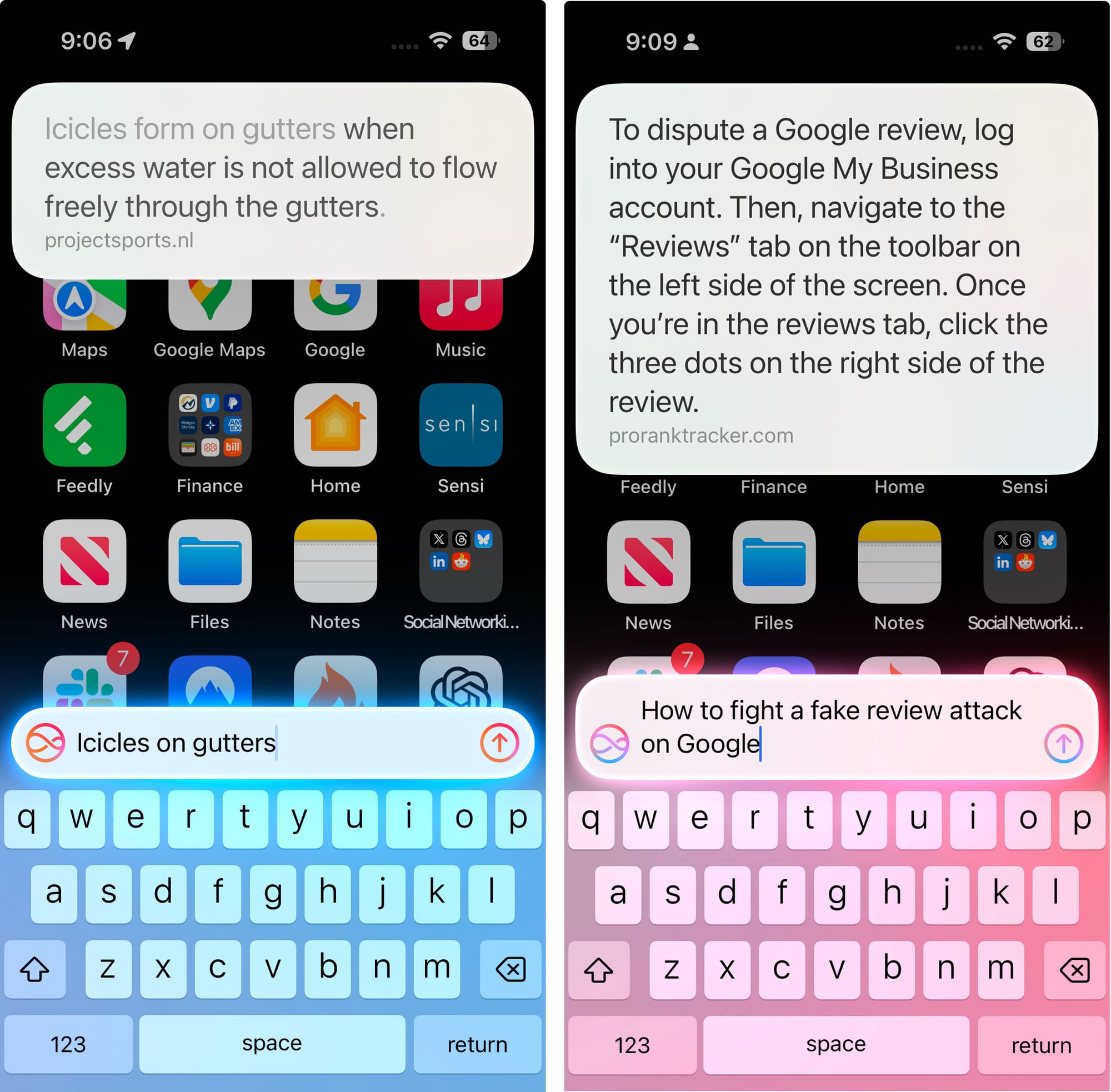

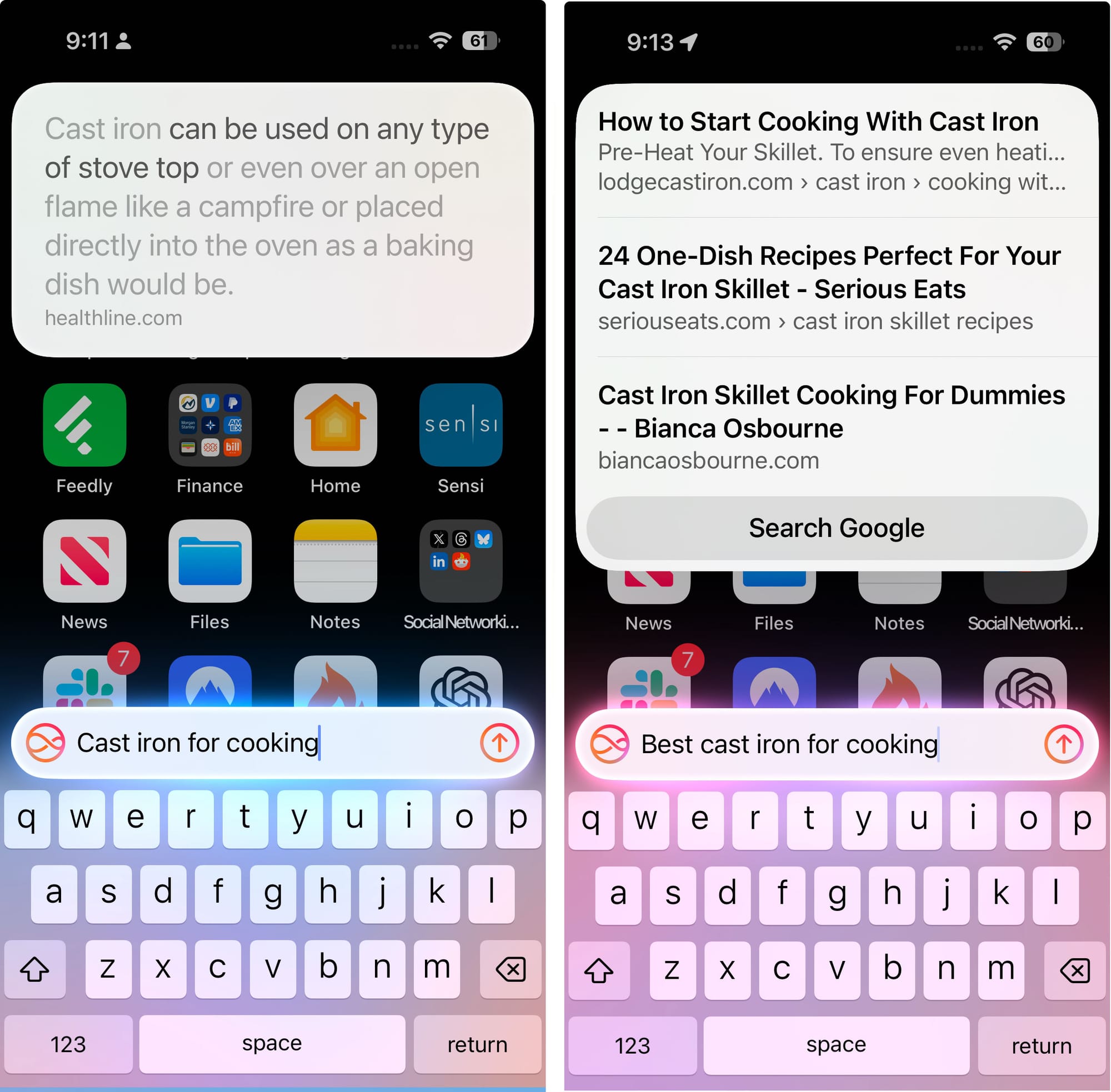

Besides surfacing more results from their internal knowledge graphs, Apple is surfacing more trusted web results via the new Siri interface as well.

When Siri determines "the answer" to a question is better provided by the web, it presents a single trusted result, much like the "I'm Feeling Lucky" Google result of days long gone by. With current News queries, it often presents three results to choose from.

Apple is known to have one of the largest web indexes outside of Google, with billions of websites indexed. It clearly leverages that index with an on-phone overlay that provides a direct answer but can also take the user directly to a particular website for an answer, totally sidestepping internet search.

It appears that when Siri lacks trust in its ability to provide an "I'm Feeling Lucky" answer, it will head over to Google for the answer. Exactly when or how or why that decision is made is unclear.

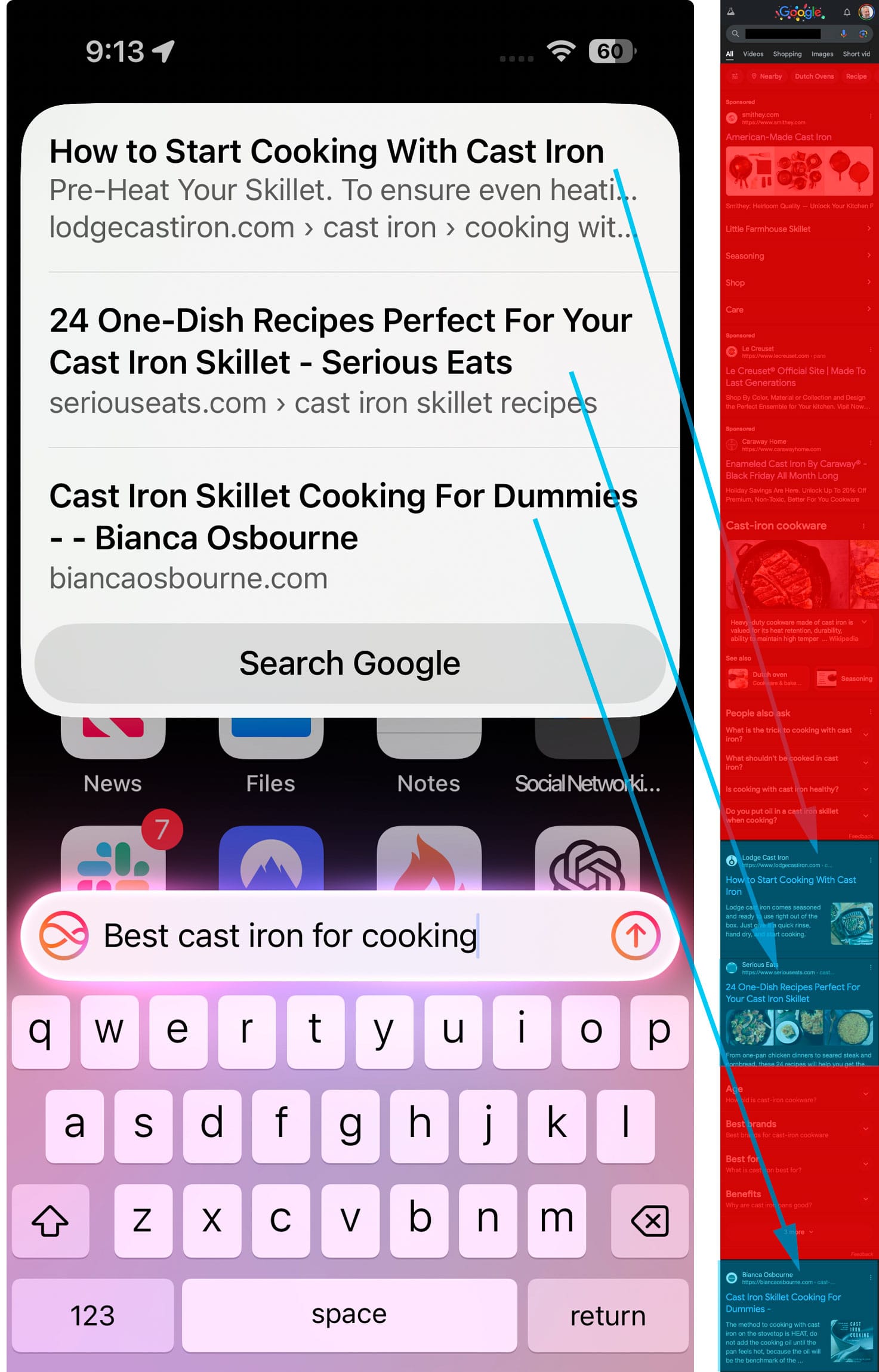

Sometimes Siri decides it needs to surface Google results. It will then strip out all of the ads, Knowledge Panels, People Also search for, People search next, Fast Pickup or delivery etc., and show the top three organic results. It also offers an opportunity to search further on Google.

iOS 18.2 Update

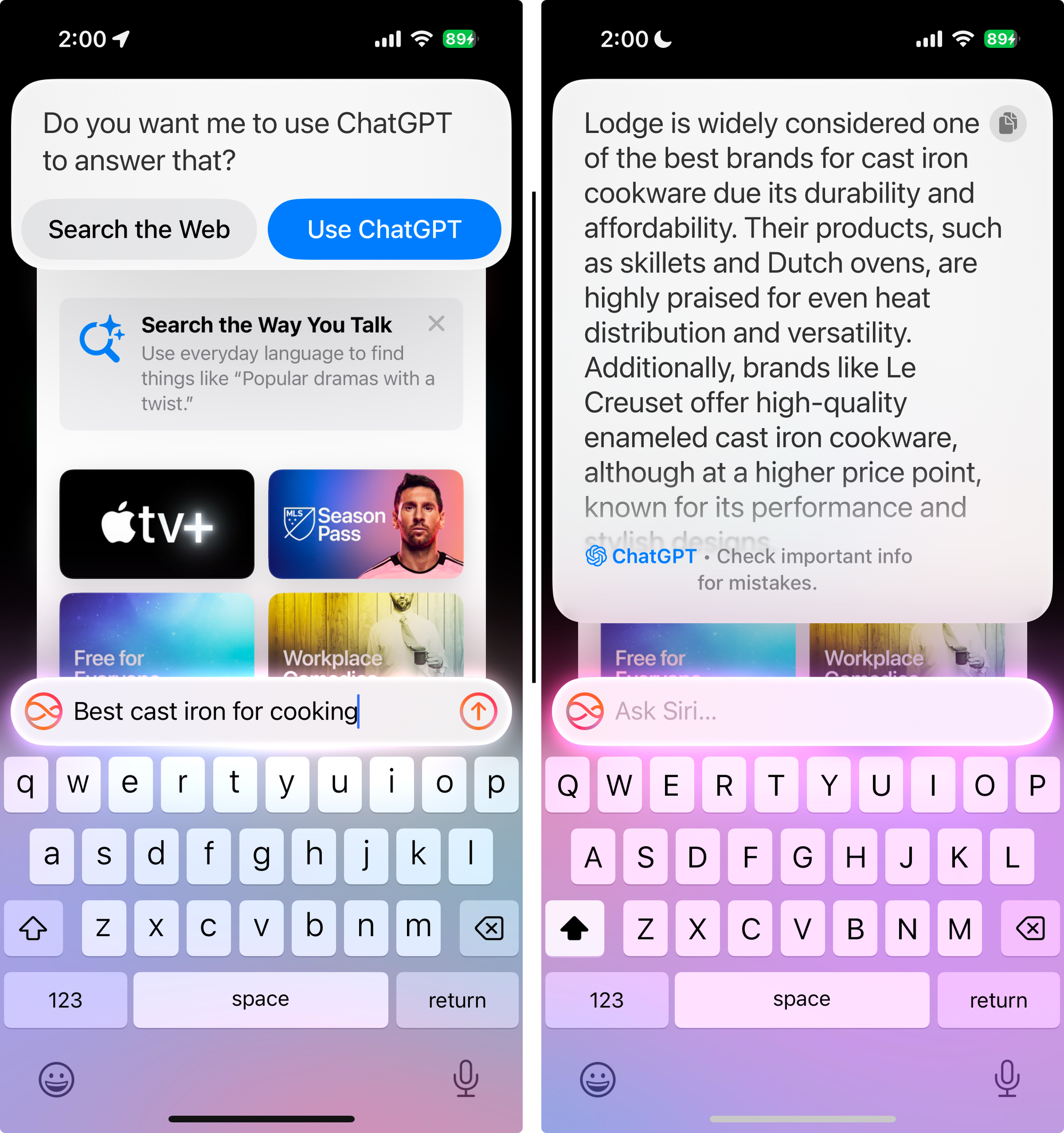

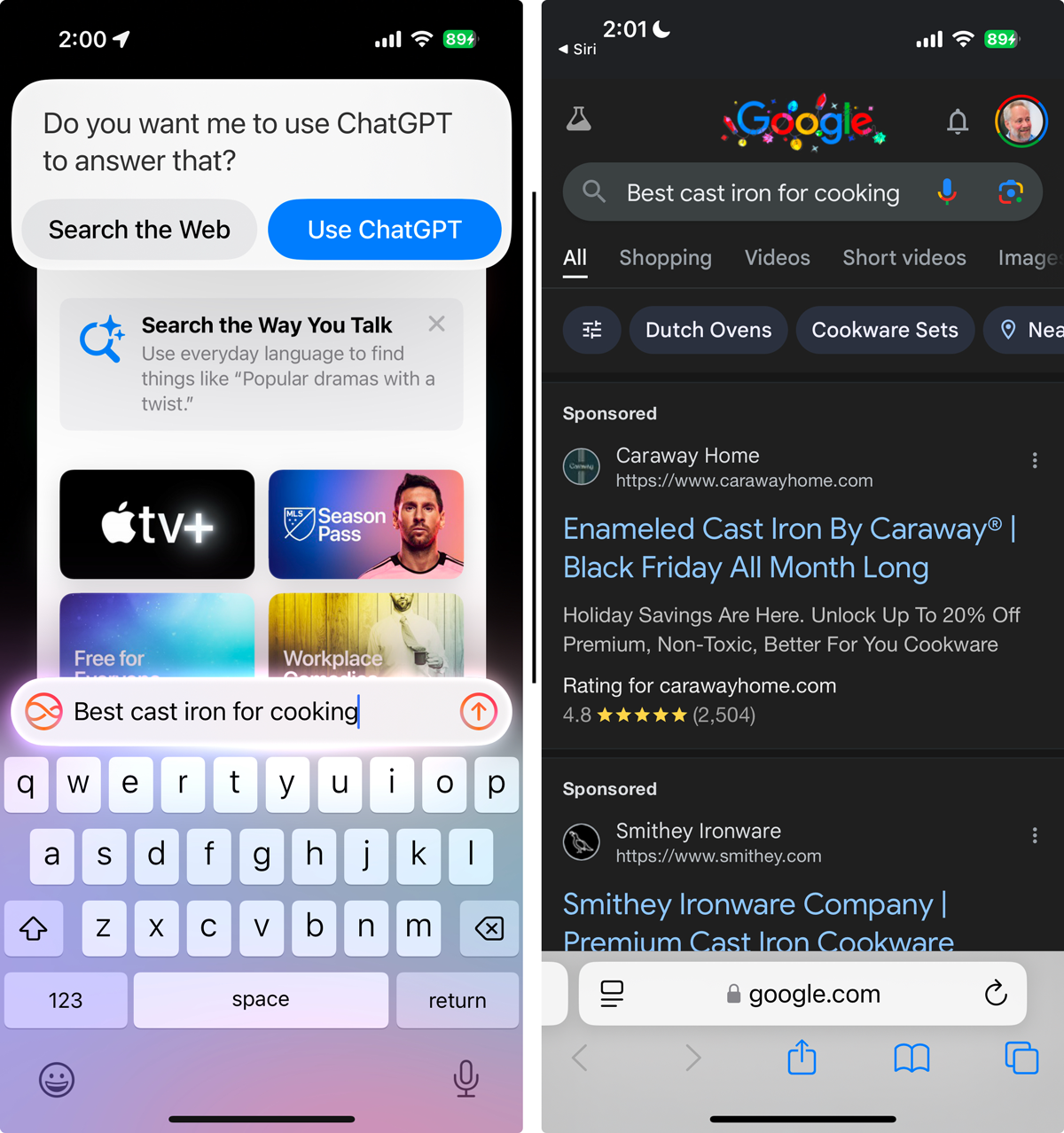

How have results changed with the rollout of iOS 18.2 on 12/11/24? I re-ran all my searches, and the only noticeable change is the addition of an intermediate pop-up before a user is directed to either web search or ChatGPT. In some ways, the new interface feels less user-friendly. It requires a decision (search or ChatGPT), an extra click, and is not particularly speedy. If the information in the ChatGPT box exceeds its size, it can be scrolled but is not persistent, and there’s no way to recall it if you touch another part of the screen. However, you can continue and follow up on the conversation via Siri and the details are stored in your ChatGPT account.

When choosing the "Search the web" option, it performs the search directly on the user’s preferred search engine (Google in my case) and opens the results directly in Safari. This approach is faster and persistent, unlike the ChatGPT responses. However, the downside is a more cluttered Google interface that you previously could avoid.

Recipes and More Recipes

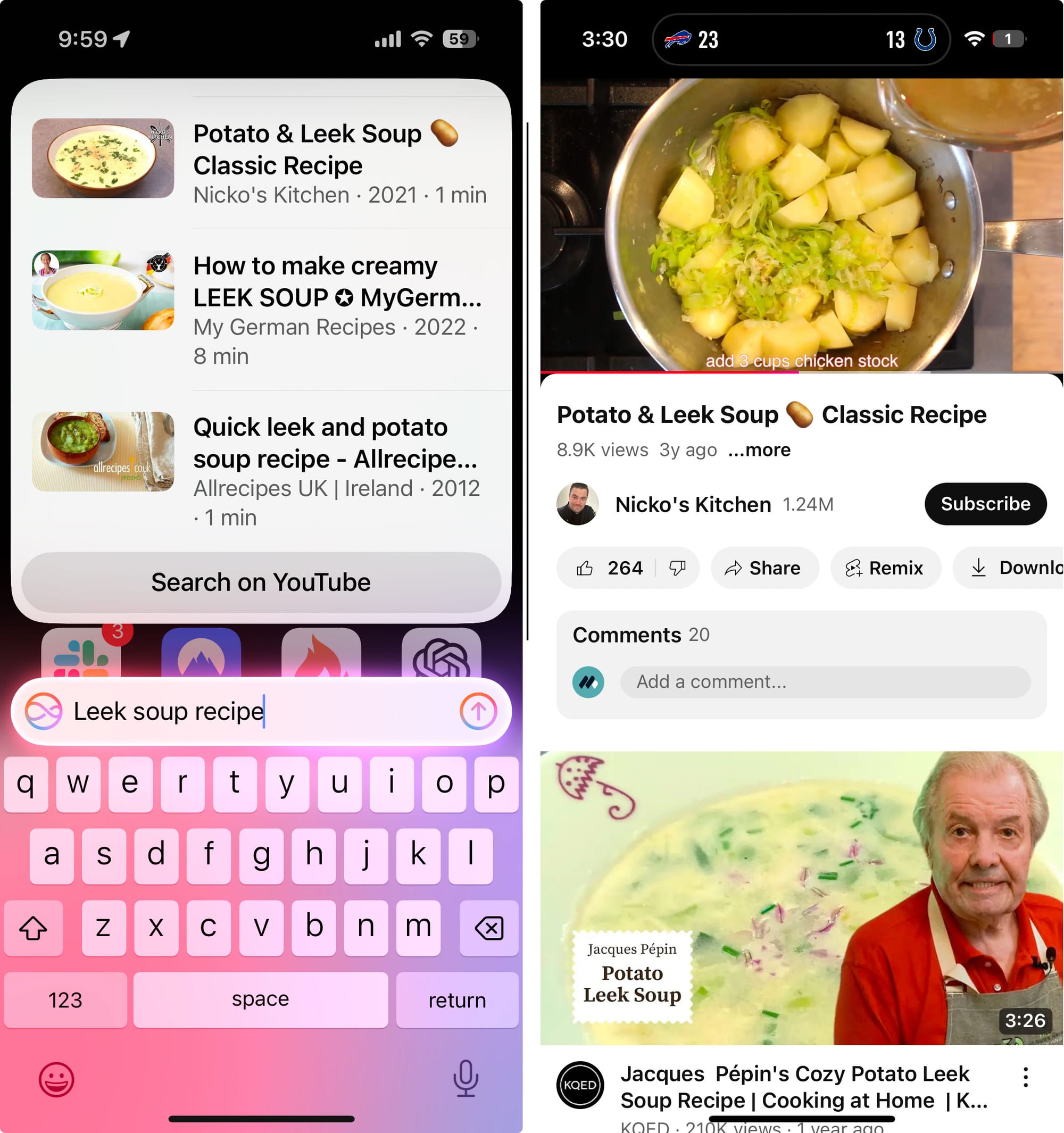

Among the more reliable results Siri now returns are YouTube recipes when you are looking for cooking ideas.

There are rumors of an Apple smart home display coming next year and it makes sense, if it sits in the kitchen, that it would have voice inputs/controls and video out for recipes. Clearly Apple could have partnered with the NY Times or Cook's Illustrated, and might still. While a text recipe might be most people's current modus operandi, I could envision video being a better delivery mechanism for a kitchen-based home display if the interface, as is rumored, is primarily voice.

As the world's second largest search engine and the largest search source not under Department of Justice scrutiny, YouTube might just be buying the space.

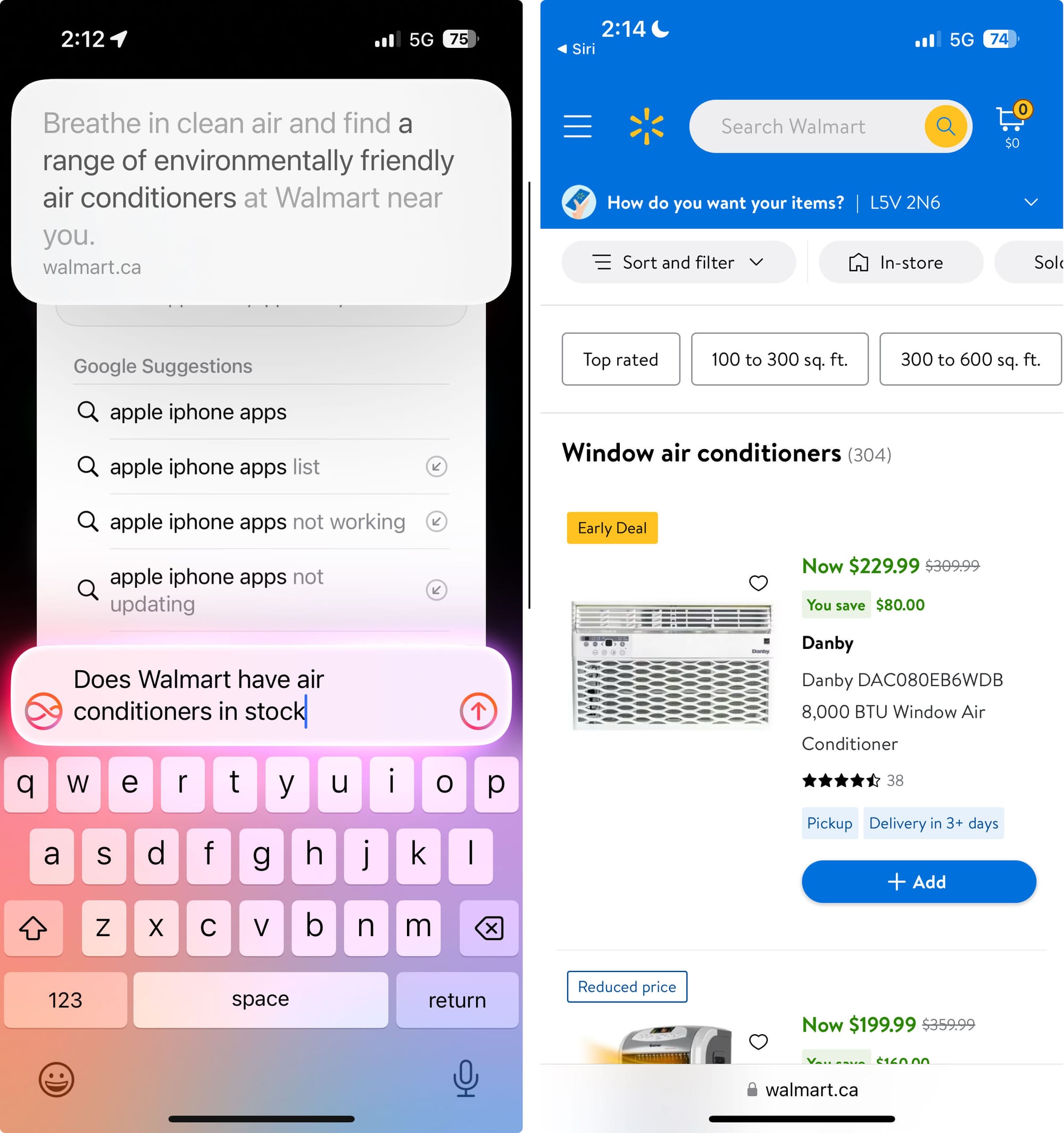

Product Search – Hello

Products are obviously something that folks search frequently on Google and Amazon. I was surprised when Siri, which is supposed to deliver primarily personal and not "world knowledge," actually returned a usable result when I asked "Does Walmart have air conditioners in stock?" It gave me a brief on-screen card and then took me directly to Walmart's air conditioners page. These types of Siri product inquiries are currently quirky and unpredictable and may not be answered correctly or usefully. It is not clear if this result is just a fluke of the beta or a direction that Apple is planning on taking.

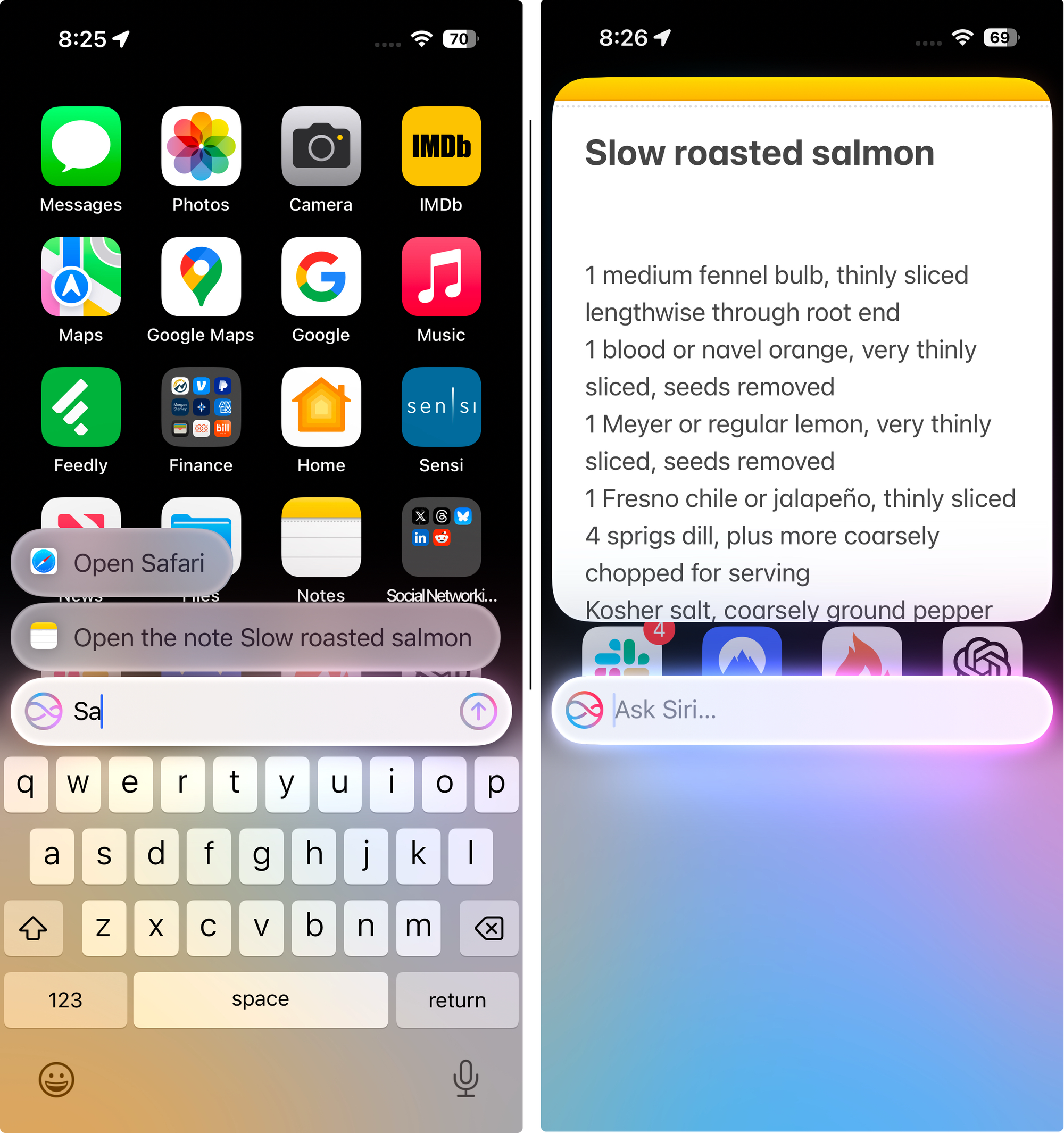

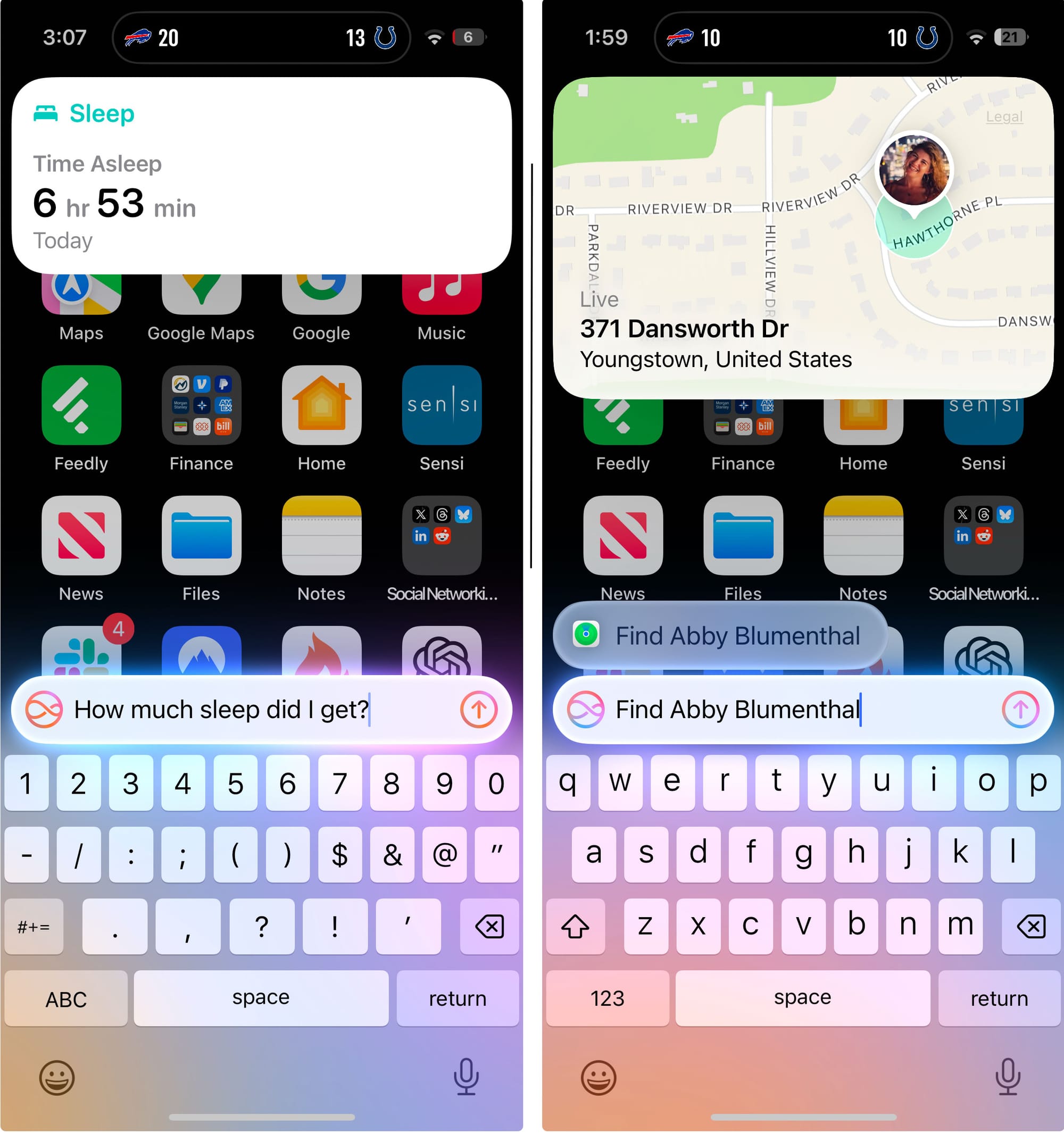

Siri Gets Personal

To reach its full potential as an AI agent, Siri needs to "know us." It can currently access your Calendar, Reminders, Health, Find My, Messages, Notes and Mail, and can quickly surface information on-screen without the need to enter the app.

Knowledge Graphs Are Hard

The hard part of Knowledge Graphs isn't building them but maintaining them. Google relies on web scraping and UGC to maintain theirs. Apple seems to be generating theirs by partnering with data partners, trusted web resources and news organizations. While Apple's approach might limit them to fewer graphs, it tends to avoid the abuse problems that Google often sees with UGC. And as we have seen with the recent election results and their new Sports app, they are capable of handling real time and near real time information.

For the foreseeable future, when Siri 2.0 can't answer a given query, Apple will pass off the query to ChatGPT. And there are rumors of Siri gaining significantly more ChatGPT-like conversational, generative AI features by 2026. It remains to be seen whether Apple's in-house AI models will simply complement or replace ChatGPT.

ChatGPT will likely remain and continue to do the types of things that a "world-knowledge" LLM can do, which are potential brand risks for Apple, while Siri will apply any new AI/agentic capabilities to improving understanding and results from Apple's many knowledge graphs.

KGs + AI + R.A.G. = Brand Promise

Google has no problem testing AI in the main search results on real users and getting wacky results. That is the Google way. Apple, on the other hand, believes that an MVP should be a reasonable first stab and should not do crazy stuff. They experienced the Apple Maps debacle and seem to want to avoid that embarrassment again.

One of the reasons for Apple's slow roll-out of AI is wanting to give users solutions in a way that keeps Apple's brand promise of a tool that helps and doesn't hurt. AI LLMs currently can't keep that promise. Whatever Apple is building towards will do just that.

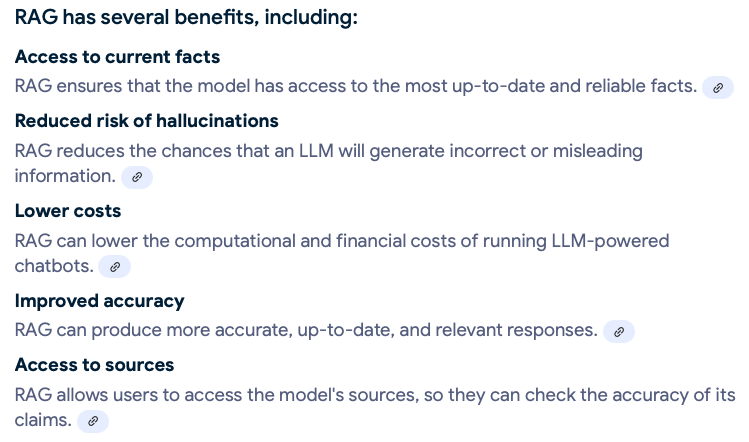

When Apple does roll out their own LLM, they will likely infuse their AI with their knowledge graphs using a technique known as RAG (Retrieval-Augmented Generation) to create reliable and predictable outcomes. RAG is a natural language processing (NLP) technique that combines the strengths of both retrieval- and generative-based AI models.
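To make the RAG idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the toy `TRIPLES` list stands in for a knowledge graph, the word-overlap scoring in `retrieve` stands in for a real retriever, and `generate` is a placeholder where an LLM call would go. It is not how Apple does it, just the general shape of the technique: retrieve trusted facts first, then hand only those facts to the generative model.

```python
import re

# Hypothetical toy "knowledge graph" of (subject, relation, object) triples.
# These are illustrative placeholders, not real Apple data.
TRIPLES = [
    ("Example Cinema", "shows", "Example Movie"),
    ("Example Movie", "stars", "Example Actor"),
    ("Example Actor", "appears_in", "Example Show"),
    ("Example Diner", "serves", "Indian food"),
]


def tokenize(text):
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, triples, k=2):
    """Retrieval step: rank triples by word overlap with the query, keep top k."""
    query_words = tokenize(query)
    scored = []
    for triple in triples:
        score = len(query_words & tokenize(" ".join(triple)))
        if score > 0:
            scored.append((score, triple))
    # The sort is stable, so ties keep their original order.
    scored.sort(key=lambda pair: -pair[0])
    return [triple for _, triple in scored[:k]]


def build_prompt(query, facts):
    """Augmentation step: put the retrieved facts in front of the question."""
    lines = [f"- {s} {r.replace('_', ' ')} {o}" for s, r, o in facts]
    return (
        "Answer using only these facts:\n"
        + "\n".join(lines)
        + f"\nQuestion: {query}"
    )


def generate(prompt):
    """Generation step: a stand-in for an LLM call; it just echoes the prompt."""
    return prompt


def answer(query):
    return generate(build_prompt(query, retrieve(query, TRIPLES)))


if __name__ == "__main__":
    print(answer("Which cinema shows Example Movie?"))
```

The point of the design is that the model only sees facts that came out of the graph, which is what would make the outcome more predictable than a free-running LLM.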

Imagine future queries that combine and articulate knowledge from discrete graphs: "Provide driving directions to theaters showing new movies with the stars of Shrinking" or "Find the best Indian restaurants in Brooklyn or Queens that have been reviewed in the NY Times" and "Indicate how long it will take to get to them via public transportation".

What is Apple doing?

Is Apple building a search engine? The answer to that is no – at least not in any traditional sense. There are lots of reasons we won't see an Apple search engine that functions like Google in the next few years. And we may never see one.

First and foremost, Google's appeal of the DOJ antitrust victory could take up to 5 years. Google will cling to their default-search position on the iPhone as long as possible, as will Apple. Why? Because it provides both significant data advantages and income to Apple.

Second, Apple's approach to AI is, in typical Apple fashion, intentionally slow and stepwise. Many of the currently announced AI features will not be available until after the first of the year. Due to RAM and speed constraints, the AI features will only run on a few current iPhone models.**

We don't know which new models besides the iPhone 16 series will be capable of running Apple Intelligence, although it seems likely to reach across the product line within 12 months. Given that users hang on to their iPhones for an average of 4 years, it could still take 3 years for Apple to have Siri 2.0 AI capacity available to the majority of their user base.
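The three-year estimate can be checked with some back-of-the-envelope arithmetic. The sketch below rests on simplifying assumptions that are mine, not Apple's: phones are replaced at an even rate over the 4-year ownership cycle, every replacement phone can run Apple Intelligence, and the share of capable phones starts at zero (the `initial_share` parameter lets you change that).

```python
def capable_share(years_elapsed, ownership_years=4, initial_share=0.0):
    """Share of the user base on AI-capable phones after a number of years.

    Assumes an even yearly replacement rate and that every replacement
    phone is AI-capable.
    """
    replaced = min(1.0, years_elapsed / ownership_years)
    return initial_share + (1.0 - initial_share) * replaced


def years_to_majority(ownership_years=4, initial_share=0.0):
    """First whole year in which the capable share exceeds 50%."""
    year = 0
    while capable_share(year, ownership_years, initial_share) <= 0.5:
        year += 1
    return year


if __name__ == "__main__":
    for year in range(5):
        print(f"Year {year}: {capable_share(year):.0%} capable")
    print("Years to a majority:", years_to_majority())
```

Under these assumptions the capable share reaches exactly half after two years and only becomes a true majority in year three. A meaningful starting share of capable phones would shorten that.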

Finally, Apple doesn't want to compete directly with a resource-rich company like Google. It would appear that the path that they have chosen, to bring results closer to the user as an integral part of the operating system, allows them to "think different" about search.

How Will Apple Replace $20 Billion?

The DOJ has recommended that Google no longer be allowed to buy the default search position on the iPhone. But the potential loss of the Google default search payment doesn't mean Apple can't still charge search engines for access; it just needs to be non-exclusionary.

It would seem, though, that Apple has a longer-term view of replacing this income by selling subscriptions around their Knowledge Graphs and increasing ad revenue.

Apple News, Music, TV, Gaming, and Sports Knowledge Graphs are all supported by Apple and third party subscriptions. Currently Apple technology "makes advertising possible on the App Store, Apple News, Stocks, and Apple TV." With Apple moving into News Ad sales directly, it could foreshadow similar in-house ad efforts around other Knowledge Graphs as well. Local is an obvious fit, as would be Music, Books and Gaming. Apple is continuing to add new Knowledge Graphs as well. They are currently hiring for Integrations Specialist - Search Ads.

Apple currently has an installed base of 2.2 billion users, which is growing at about 10% a year. That base will, over the next 3 to 4 years, continue to increase in appeal to advertisers. If Google can't pay Apple directly, due to judicial decree, Apple will have little difficulty gaining additional income from other companies that desire access to this valuable user base.
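For a rough sense of scale, the figures above can be compounded forward. This is a quick sketch that assumes the 10% growth rate holds steady every year, which is my simplification; `projected_base` is just an illustrative helper name.

```python
def projected_base(current_billion, annual_growth, years):
    """Compound a starting base forward at a fixed yearly growth rate."""
    return current_billion * (1 + annual_growth) ** years


if __name__ == "__main__":
    for years in (3, 4):
        size = projected_base(2.2, 0.10, years)
        print(f"After {years} years: about {size:.2f} billion")
```

At a steady 10%, the base would land at roughly 2.9 billion after three years and 3.2 billion after four.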

Between subscriptions, ad sales and other third party deals we won't have to worry about Apple losing Google's payments. And with an interface that obviates the need for search to a large degree, Apple seems to be skating to a future puck position so that users won't really miss Google when the full transition takes place.

Is Siri Sherlocking Search?

What Apple seems to be doing is adding increasingly sophisticated AI-based information retrieval to the system level of the phone. Information that once took a specialized search engine or app to retrieve can now be answered directly from a query from any screen of your iPhone.

Apple also continues to add new Knowledge Graphs. In November they added a Classical Music app to the iPhone and CarPlay and enabled Siri to access and retrieve its contents. They are also, with iOS 18.2, allowing third party apps to better integrate with Siri 2.0 results via app events.***

The arrival of ChatGPT integration will both expand the types of answers Siri can provide and offer a way to keep users engaged with Siri. As Apple expands its definition of what information a personal device should provide and how to securely provide it, the company will continue to bring more query results on device.

Will This Siri Succeed?

Consumers are currently making 1.5 billion Siri queries a day, but many users, particularly on the iPhone, have been deterred by Siri's inability to properly understand more in-depth queries: the "brittleness" of voice search and the limits of voice as an information retrieval interface.**** It's early days for Siri 2.0 and Apple Intelligence. Despite already shipping, it's still in beta. The new Siri has improved its ability to understand me, although when the environment is loud it still has problems extracting my voice from the background.

What Apple brings to the Siri party is a broad ecosystem of devices where Siri could be a game changer. For users of Apple TV, AirPods and HomePods, voice has largely been effective as an interface. But voice isn't always an ideal interface. To that point, Apple has built out a Type-to-Siri interface that is likely to become popular on the Mac and to some extent on the iPhone as well.

Accessing the Type-to-Siri interface on both the Mac and the iPhone is not obvious. On the iPhone it requires a double tap at the bottom of the screen and on the Mac requires fiddling with the accessibility settings to make it appear. There is also the confusion of the relationship between Spotlight search, available on all iPhones, and the full Siri 2.0 capability currently available on the iPhone 15 Pro and above.

Given that the Type-to-Siri 2.0 interface is a pixel for pixel match for Spotlight, it is safe to assume that Spotlight will be replaced at some point.

To succeed, Apple must maintain interest and engagement with Siri 2.0, while rolling out not only new phones with improved UI access but also new devices (e.g., the smart home device), that engage users with Siri across the ecosystem. They must do this while overcoming some reluctance toward voice search on phones in general and Siri in particular. A tall order, but a company that can bring Apple Maps back from the brink seems to have the chops to do this as well.

How Marketers Should Respond

We are in a period of epochal change. Generative AI is literally the bridge to the future. As Rand Fishkin's recent research pointed out, search has already changed a great deal. Brands are increasingly important and content alone is no longer a guaranteed path to client acquisition.

Google is saying search will ‘change profoundly’ in 2025. Apple appears to be betting that search is undergoing radical change and, within four years, this will be evident to all.

While much will change, much will stay the same: Apple and Google are likely to control many of the devices we use, Google search habits will die hard and branding will retain its critical role in building out a client base wherever consumers end up.

Much is unknown and there will be much for marketers to learn. Who will dominate generative AI? Will Apple present real opportunities? And if they do, how do AI and Apple even choose the winning answers?

At the meta level, marketers need to accept the fact that search is going to become more fragmented. It is likely that Google will slowly lose out as the singular place for consumers to get answers (arguably that's already true). Amazon has already shown how that happens with products; Apple and ChatGPT are showing a way forward for informational queries that is likely to increasingly cannibalize Google. And Google will cannibalize itself with AI.

The need for a well-designed website that answers customers' key questions will remain essential. Branding IRL and outside of Google is increasingly important.

Follow the path of the current crop of generative AIs to see which of them is impacting referrals. ChatGPT has done a great job building out visibility, but Google will not go gently. ChatGPT will get a boost from Apple integration, but that benefit might be short-lived as Apple has made clear that other gen AIs are welcome.

In the Local space, you should stay up with Apple Business Connect and develop reporting to understand your success. This is particularly true if you cater to high-end clientele or are in the hospitality fields, where we are seeing significant Apple Maps traffic.

Nobody said digital marketing would be easy, but it's certainly going to be exciting. That's for sure.

*Sherlocking, a term made infamous when Apple's search tool Sherlock put the third-party app Watson out of business by integrating its features, is really just technology evolution in action. Over time, as devices become more capable, it makes sense to take the functionality of apps and build it into the hardware (think the new photo action button on the iPhone 16) or, more commonly, to integrate information retrieval into the OS. Much of what Google does would be better served by being delivered easily and transparently to the user.

**Apple Intelligence with Type to Siri is available on iPhone 16, iPhone 16 Plus, iPhone 16 Pro, iPhone 16 Pro Max, iPhone 15 Pro, iPhone 15 Pro Max, iPad with A17 Pro or M1 and later, and Macs with M1 processors and later.

***You can experience Siri App Events now if you have iOS 18.1, an iPhone 15 Pro or greater, and CarPlay and/or AirPods Pro and Apple Maps. If you ask for a local category suggestion using voice, Siri will suggest the first of several locations that fulfill the query and will follow up with a question as to whether you want that location. With the AirPods Pro, if you shake your head left to right for "no" it will then ask the same for the second location. If you nod your head for "yes" it will then ask if you want it to call the location or provide driving directions.

****This conversation with John Giannandrea, previously the Google AI lead who went to Apple due to weak leadership at Google, is worth listening to. He details why voice search has failed to this point and why, with LLMs, it has a chance for broader adoption.