EP 207 - Trust Before Clicks: What Google’s AIO UX Study Reveals About Real User Behavior

Users aren’t clicking like they used to—and trust is the new filter. In Part One of our deep dive, we unpack what a first-of-its-kind UX study reveals about how people really interact with Google’s AI Overviews. Spoiler: skimming wins, reviews matter, clicks lie.

The Podcast Deets (Join us for part 2 next week)

00:00 – Why Behavioral Research on Google’s AIO Was Necessary

01:17 – Clickstream Isn’t Enough: Rethinking User Data

04:10 – How the Study Was Designed: Tasks, Tools & Tracking

07:55 – Who Participated: Demographics & Statistical Rigor

09:00 – What Users Were Asked to Do (and Why It Mattered)

11:45 – When AI Overviews Appear—and How People React

12:41 – Trust Before Relevance: A New User Search Model

15:34 – How Awareness and Reviews Signal Trust

19:03 – Skimming, Risk & Behavioral Patterns in the SERP

22:47 – Clicks Are Dead Weight: What Really Drives Decisions

🧠 Trust Over Clicks: How People Really Use Google’s AI Overviews

Let’s cut through the noise: Google’s AI Overviews (AIOs) may look fancy, but the way people interact with them is anything but magical—and it’s not what SEOs have been telling themselves.

In Part One of this podcast, Kevin Indig and Eric Van Buskirk break down their first-of-its-kind behavioral UX study. The big idea? Clicks lie. Trust tells the truth.

📊 The Study That Needed to Happen

We’ve had endless clickstream data. What we haven’t had is actual footage of real users navigating real Google queries. This study fixed that.

- ✅ 70 participants from Prolific

- ✅ 8 real-world tasks (local, health, shopping, etc.)

- ✅ Chrome plugins or mobile apps tracked behavior

- ✅ Annotators logged scrolling, dwell time, and comments

- ✅ 30 hours of footage, 13,000 words of notes

This wasn’t “ask people what they did.” This was: watch people do it.

🔍 Clicks Are Not What You Think

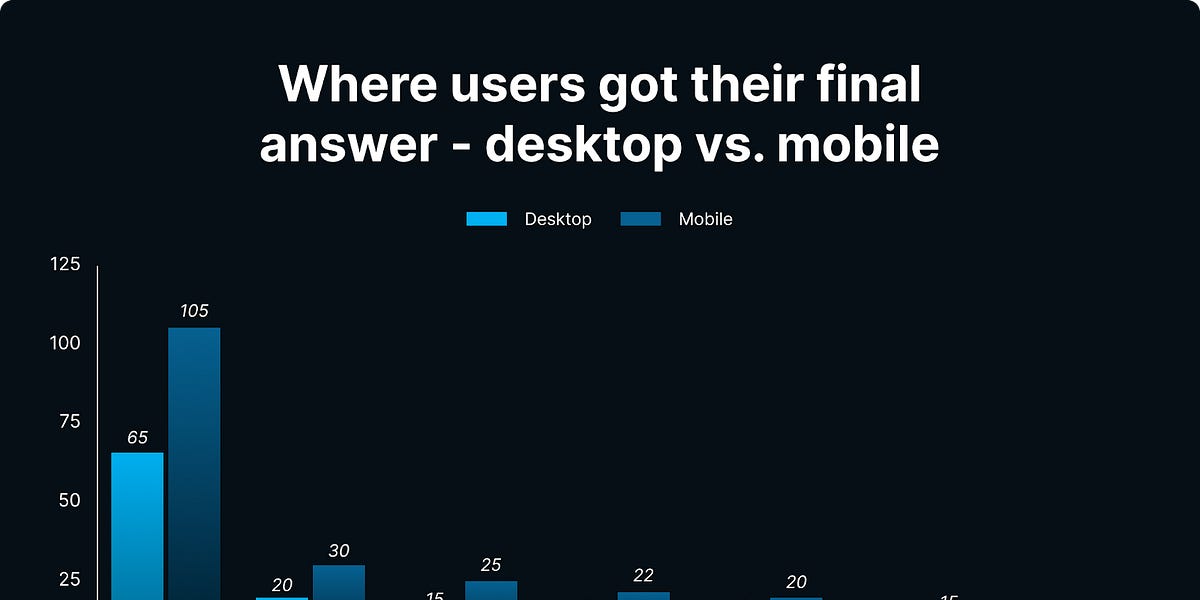

Forget the old SEO gospel that clicks = success. This study shows it’s not about clicks. It’s about final answers.

Users clicked to validate or skim. The majority of decisions were made without clicking. And only about 20% of “final answers” came from AIOs. Most came from good ol’ organic links.

Clicks are just noise. Final answers are the signal.
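To make the clicks-versus-final-answers distinction concrete, here is a minimal sketch of how you might tally both from annotated session notes. Everything in it is an assumption for illustration: the record fields (`clicked`, `final_answer_source`) and the sample rows are invented, and none of it is data or tooling from the study.

```python
from collections import Counter

# Hypothetical annotated task records. Field names and values are invented
# for illustration and are NOT data from the study.
records = [
    {"task": "local", "clicked": False, "final_answer_source": "organic"},
    {"task": "health", "clicked": True, "final_answer_source": "aio"},
    {"task": "shopping", "clicked": False, "final_answer_source": "organic"},
    {"task": "local", "clicked": True, "final_answer_source": "organic"},
    {"task": "health", "clicked": False, "final_answer_source": "other"},
]


def final_answer_share(rows):
    """Return the fraction of final answers attributed to each source."""
    counts = Counter(row["final_answer_source"] for row in rows)
    total = sum(counts.values())
    return {source: n / total for source, n in counts.items()}


def click_rate(rows):
    """Return the fraction of tasks in which the user clicked at least once."""
    return sum(row["clicked"] for row in rows) / len(rows)


shares = final_answer_share(records)
rate = click_rate(records)
print(shares)
print(rate)
```

The point of tracking the two numbers separately is that they can diverge: a task can end in a final answer with no click at all, so click rate alone says little about where decisions actually came from.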

💥 Trust Comes First. Relevance Comes Second.

Kevin’s mental model of search got flipped. It’s no longer:

“Which result answers my question best?”

It’s now:

“Do I trust this source?” then “Is it relevant?”

People scroll past perfectly good content if the brand isn’t familiar or trusted. If they don’t know you, they won’t click you.

🌟 Stars = Trust. Everywhere.

From local to shopping, reviews are king. People scroll specifically looking for stars.

- Don’t have them? You’re skipped.

- Only two reviews? You’re sketchy.

- Well-reviewed on Yelp or G2? Jackpot.

In short: Review presence = legitimacy.

📉 AIOs Are Skimmed, Not Studied

86% of users skim AIOs.

They glance at the top, rarely scroll, and treat it like a TL;DR blog post. Only when the stakes are high—like medical or financial topics—do people slow down and actually read.

AIOs aren’t the be-all and end-all. They’re the new intro paragraph.

🧪 Risk Changes Everything

In high-risk searches, people:

- Scroll more

- Click to fact-check

- Linger in AIOs

- Cross-reference citations

In low-risk searches (shopping, storage units), they go faster and let price or reviews steer the decision.

The pattern: the deeper the risk, the deeper the research.

📱 Users Don’t Stay on Google

Sure, AIOs might “answer” a query. But users still go to:

- YouTube

- Amazon

- Maps

The modern search journey is multi-touch and cross-platform. Google’s not the destination—it’s just one stop along the way.

🙅‍♂️ Users Don’t Click on AIO Icons (or Much of Anything)

Those tiny link icons in AIOs? People ignore them. Even panels with 20 clickable links barely get touched. Why? Because users don’t feel like they’re supposed to.

Most treat AIOs like static answers. They want to validate, not engage.

🧠 Users Are Skeptical—But Still Satisfied

Here’s the twist: users double-check AIOs… and still report high satisfaction. Even when they weren’t sure if the info was accurate, they walked away feeling good about it.

Trust isn’t always rational. It’s about perceived completeness, not factual precision.

🤫 Google Benefits From the Confusion

Kevin and Eric argue that Google has long benefited from the false belief that it’s the entire search journey. The truth? It never was.

Google gives almost no data back to SEOs—and that fog of uncertainty keeps the illusion alive. Meanwhile, the real decision paths often happen off-platform.

🏁 Final Word (for Part One): Trust is the New CTR

Whether it’s through brand familiarity, review signals, or search presence across platforms, trust has become the gatekeeper.

So stop obsessing over blue links and start asking:

“Does my brand show up where users already trust the answer?”

Because in the world of AI Overviews, being seen is not enough.

You need to be believed.
